I recently tried doing an elimination diet – emphasis on the “tried.”

I think I lasted about one week, but one thing I did manage to do was avoid soy. During that time, I discovered a way to prepare Asian-inspired cuisine without tamari or soy sauce.

Exciting, right? Allow me to demonstrate.

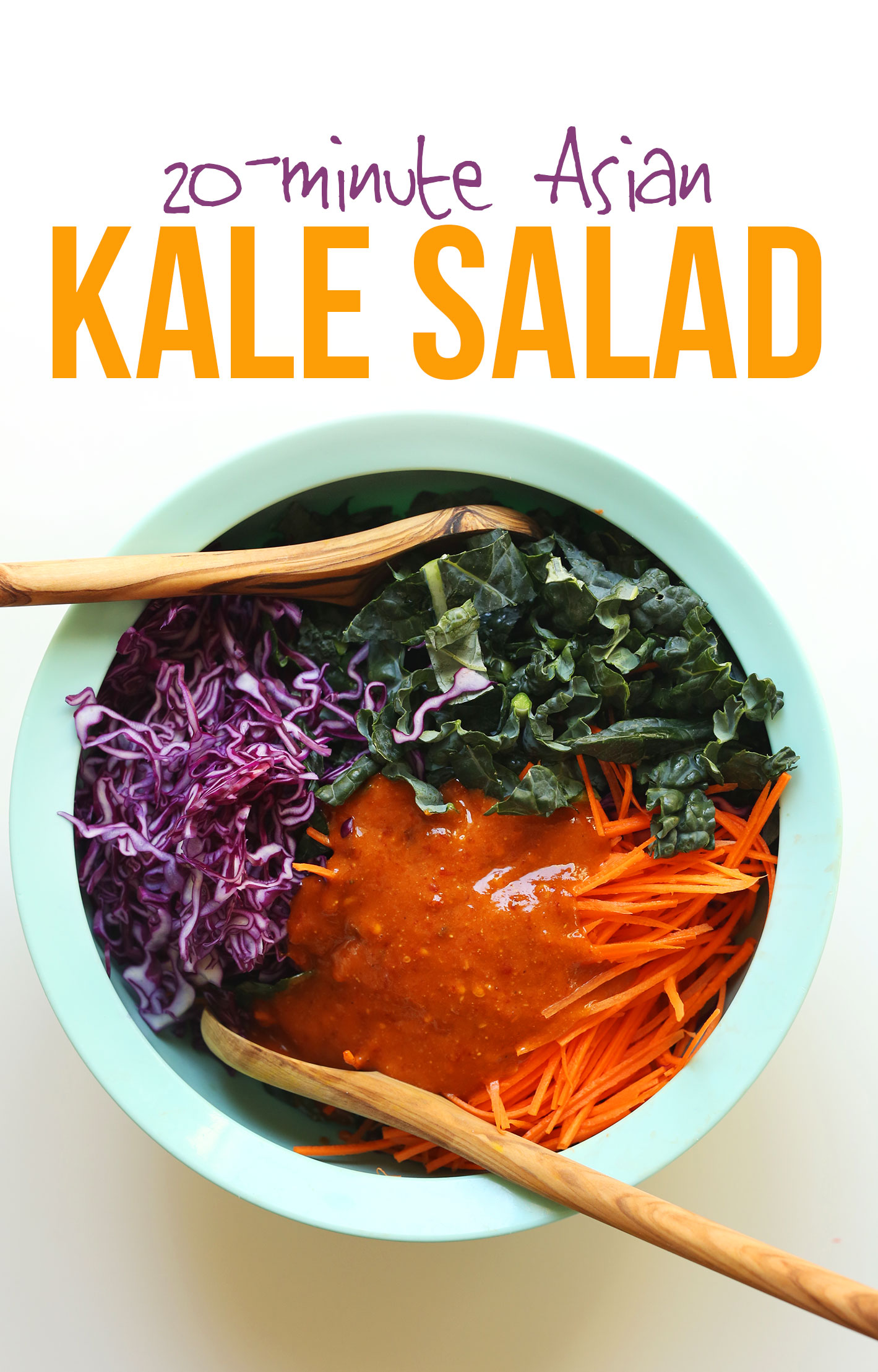

A local grocery store in Portland has this amazing kale “slaw” with carrots and cabbage, and I’ve basically been living off of it for the past month.

It’s the easiest thing to reach into the refrigerator and grab to make quick salads, scrambles, or bowls. And I’ve made my own version for the base of this salad.

The amazing part of this 20-minute recipe is the dressing. It’s a simple mix of cashew butter, chili garlic sauce, maple syrup, and sesame oil (meaning it’s soy-free!).

A sprinkle of salt (or tamari) adds a bit of saltiness if needed, but I usually skip it! The dressing is a gorgeous bright orange hue, creamy, and spicy-sweet (my favorite).

This salad makes a delicious snack or side, but to make it an entrée, I added some miso-roasted crispy chickpeas! That’s right, I did a little internet research and found a few tips for making chickpeas super crispy and crunchy.

I hope you all LOVE this salad! It’s:

Crunchy

Savory

Spicy

Fast

Healthy

Satisfying

& Delicious

This is the perfect salad to pack for lunches, picnics, or when you’re craving a light but satisfying meal. The crunchy chickpeas add a nice amount of protein and fiber to keep you satisfied for hours.

More Kale Salads

- Abundance Kale Salad with Savory Tahini Dressing

- Kumquat Kale Salad with Tahini Dressing

- White Bean Kale Salad with Tahini Dressing

- Raw Kale and Brussels Sprouts Kale Salad w/ Tahini-Maple Dressing from Cookie + Kate!

If you try this recipe, be sure to let us know! Leave a comment, rate it, and tag a picture #minimalistbaker on Instagram. Cheers, friends!

Crunchy Kale Salad with Spicy Cashew Sesame Dressing

Ingredients

CHICKPEAS* optional

- 1 15-ounce can chickpeas

- 2 Tbsp avocado or untoasted sesame oil

- 1 Tbsp white or yellow miso paste* (ensure vegan friendly // use chickpea miso if soy-free)

- 2 tsp maple syrup

- 1 tsp chili garlic sauce

VEGETABLES

- 1 large bundle finely chopped kale (organic when possible // ~5 cups as original recipe is written)

- 1 cup finely grated carrots (I use this mandolin // 2 large carrots yield ~1 cup)

- 2 cups finely shredded red cabbage (I use this mandolin // 1/2 small cabbage yields ~2 cups)

DRESSING

- 1/4 cup creamy cashew butter (or sub almond or peanut butter)

- 1/3 cup chili garlic sauce

- 1/4 cup toasted or untoasted sesame oil

- 3 Tbsp maple syrup

- 1 healthy pinch sea salt (optional // or 1 Tbsp tamari per healthy pinch salt)

Instructions

CHICKPEAS (optional)

- If preparing chickpeas, preheat oven to 425 degrees F (218 C). Rinse and drain chickpeas well. Then pat very dry – this will help them crisp up.

- To a medium mixing bowl add avocado oil, miso paste, maple syrup, and chili garlic sauce and whisk to combine. Then add chickpeas and toss to coat. Arrange on a bare baking sheet (or more baking sheets, as needed, if increasing batch size) and bake for 20-25 minutes, tossing/stirring once at the halfway point to ensure even baking. They're done when crisp and deep golden brown (see photo). Set aside.

DRESSING + SALAD

- Add kale, carrots and cabbage to a large mixing/serving bowl and toss to combine. Set aside.

- To prepare the dressing, add cashew butter, chili garlic sauce, sesame oil, maple syrup, and salt to a small mixing bowl and whisk to combine. Taste and adjust flavors as needed, adding more maple syrup for sweetness, chili garlic sauce for heat, cashew butter for creaminess, or salt or tamari for saltiness. Set aside.

- Add the dressing to the salad and toss well to combine. To serve, divide salad between serving plates and top with crunchy miso chickpeas (optional). Best when fresh, though leftovers keep covered in the refrigerator up to 3 days (even longer if the dressing is stored separately from the veggies). Store leftover chickpeas in a well-sealed container at room temperature for up to 2 days.

Notes

*Prep/cook time does not include cooking chickpeas.

*Nutrition information is a rough estimate calculated with dressing and without chickpeas.

Tiffany says

This was really good. I used whole cashews instead of chickpeas since I didn’t have any chickpeas on hand. We enjoyed it.

Lovely! We’re so glad you enjoyed it, Tiffany. Thank you for sharing! xo

molly says

excellent flavors and easy. loved the crunch of chick peas. thank you

Yay! We’re so glad you enjoyed this recipe, Molly. Thank you for the lovely review! xo

Noel says

Loved it. I used baby kale. Was still good day 2.

Woohoo! Thanks so much for the lovely review, Noel. So glad you enjoyed!

Deb says

Really satisfying and tasty salad I will definitely make again, but beware the spice! I personally will reduce the chili garlic sauce by a third or maybe even by half the next time I make it 😂😂 getting through an entree size portion of it at the current spice level is a challenge for me

Thank you for sharing, Deb! Sorry to hear it was a bit too spicy for you! Glad you still enjoyed it overall! xo

Sophie says

This salad was fantastic and will be on regular rotation! I loved the spicy dressing. I omitted the cabbage because I didn’t have any. I did make the chickpeas and they were delicious. Lots of depths of flavors. I massaged the kale with the dressing for a few minutes because I like that texture better for raw kale.

I imagine it would keep well for a few days, but will have to try that another time because it was all eaten in one sitting :) thank you!

We’re so glad you enjoyed it, Sophie! Thanks so much for the lovely review! xo

Sierra says

Great meal prep salad to have on hand for a couple of meals. Obsessed with the dressing, I’ll use it on other foods as well.

Yay! We’re so glad you enjoy it, Sierra! Thanks so much for sharing!

Hailey says

Loveee this. Another MB WIN. It was just a leeetle spicy for us. Next time will lessen the amount of chili-garlic. Otherwise, really just perfect. Amazing flavor profile. We made the chickpeas and they were great. (Took them out at 15 mins instead of 20) Also, topped with avocado, per usual. Thank you!

We’re so glad you enjoyed it, Hailey! Thanks so much for sharing!

Liz Sunderland says

The dressing is so good, I wanted to eat with a spoon – all by itself! (I used almond butter.)

Whoop! We’re so glad you enjoyed it, Liz! Thanks so much for the lovely review!

Joann Bauman says

Loved this easy, healthy salad

Thanks so much for the lovely review, Joann. We are so glad you enjoyed it! Next time, would you mind leaving a rating with your review? It’s super helpful for us and other readers. Thanks so much! Xo

E. Davis says

Is the kale blanched in the photo?

No, it’s raw kale.

Roxie says

Forgot to add a copy-edit correction to my previous comments: In the directions, #3 should say “To make the salad dressing,” not “To make the salad,”

Wonderful dish; will make again and again!

Roxie says

I prepared this exactly as directed and it was DELICIOUS. I packed it for lunch four days last week, adding the dressing to that day’s serving each morning. Great crunch, great flavor! I made the chickpeas, too and will again, next time putting parchment paper on the baking sheet to make cleanup easier.

The dressing would be delicious on noodles and fresh veggies, too, for a cold noodle salad!

Thanks so much for the lovely review, Roxie. We are so glad you enjoyed it! Next time, would you mind leaving a rating with your review? It’s super helpful for us and other readers. Thanks so much! Xo

LMM says

I’ve probably already rated this recipe in the past, but it’s so good I’m gonna do it again! I make big batches so I can eat it multiple times in a week. It’s almost like a savory salad sundae if that makes ANY sense at all! The combined flavors just make it so tasty and it’s super filling. Even my non kale eating husband loves it!

Aww, thanks so much! We are so glad you enjoy this recipe! xo

Kristen says

This salad dressing is amazing! I love it sooooo much! Thank you!!!!

nikki says

Looking forward to making this!! Have you tried making a recipe for chili-garlic sauce yet? It’s in a lot of your recipes that I love to make, but the Huy Fong brand has some undesirable ingredients ….. curious if the Taste of Thai brand Garlic Chili Pepper sauce has the same flavor as the typical chili-garlic sauce.

nikki says

Annnd I just saw that Taste of Thai garlic chili pepper still has a sodium benzoate preservative and xanthan gum…. anywhoo …. a MB Chili-Garlic recipe would be the bomb !… I’m guessing finding the right red peppers might be the challenge …

Great idea, Nikki! We haven’t tried it but will add it to our ideas list. Thanks so much!

Shannon Carpenter says

This was SO INCREDIBLE! I am making this for the 4th time in a month, as I type.

The first time I made this, the flavor profile was PERFECT. A healthy amount of heat, but not overbearing. Oddly, I cracked a new jar of chili garlic sauce on the 2nd time making (Huy Fong Foods brand) and the chili heat was quite a bit higher than what I got from the other jar (and I LOVE spicy heat in my food) — no idea why! So this go around, I halved the chili garlic paste, and it seems to be back to perfectly balanced in terms of the flavor profile.

Shannon! We’re so glad you enjoyed this recipe!

Karen says

Loved this slaw! Sweet, spicy, crunchy and creamy… Have made a couple of times now. The salad, undressed, keeps beautifully in an air tight container in the fridge for about 3 days. I have made it in bulk on a Sunday night for quick and easy lunches through the week. The miso-roasted chickpeas are best eaten on the day but still tasty, albeit a little less crunchy, on the next. I have not yet been able to get a hold of the official chilli garlic sauce mentioned so I made my own from chilli powder, chilli flakes, sriracha, rice bran oil, minced fresh garlic, sea salt and finely diced long red chilli. The whole recipe is a flavour bomb! A new favourite. Thanks for sharing Dana.

Megan says

I can’t stop eating this, so yummy! I added cucumber, sweet potato and almonds (instead of chickpeas). Will definitely make this again.

We are glad you enjoyed it, Megan!

Nikki says

This was a very delicious and refreshing salad! I tweaked the recipe a bit to limit the amount maple syrup that was used and it still turned out fantastic! Will definitely make again <3

Jackie says

I made this salad tonight to accompany the Crispy Baked Peanut Tofu and really enjoyed it! I was lazy and thus left the carrots out (too much else going on with the 2 recipes), but I loved the salad and it was so crunchy with the kale and cabbage. Great recipe to add to our salad rotation!

Jill says

The dressing is soooo simple yet so delicious!!

Oro says

Made this tonight and it was delicious. I would never have thought to use blackbean as a marinade for a salad. I used crunchy cashewnut butter because that’s all I had, worked well enough for me.

And the chickpeas were almost finished before they had crisped because they were so tasty.

Thanks Dana

Shelby says

I combined your Thai Kale Salad recipe with this one a few weeks ago, and it turned out so so good! Even my boyfriend who isn’t into salads really enjoyed this. Excited to make this for supper again tonight! :-)

Rachel says

I just made this, and although I have not tried it all mixed up yet, the sauce is delicious. To thin it out a bit, I added some rice wine vinegar and i am in LOVE. Can’t wait to eat this dish tonight and luckily I made enough for lunches :)

Braydi says

Hello Dana,

I made this salad along with a — ginger, onion, and garlic rice noodle soup (that claimed to pair perfectly with the salad) — a couple of months ago, and I can’t seem to find the soup anymore!

If you know the whereabouts of this recipe, it would be greatly appreciated!

Thank you for all of your wonderful recipes.

Maria says

This salad is so good! The chickpeas are amazing. I didn’t have canola or untoasted sesame oil at home, so I used virgin olive oil. Only one tbsp though. I didn’t add any oil to the dressing and added a bit of water and sesame seeds instead. I also used less chili garlic sauce for the dressing (60 gr.) and it was still a little bit too spicy for me, but delicious anyway. I’ll try with less chili garlic next time because I’m doing this again for sure.

Erin says

This is no ordinary salad! This is FANTASTIC! The flavors are so yummy together and it’s beautiful with the deep green kale, carrot and purple cabbage. The crunchy garbanzo beans on top are the perfect exclamation point. If you haven’t tried this one yet…you NEED TO! This is a new go-to salad for us and will be great to take to potlucks as well. Although the recipe makes it sound like this doesn’t keep well, we find the flavors to be just as yummy the next day. SO GOOD!

Ella says

This was really good! But definitely spicy! I followed the recipe almost exactly, but put a smidge less chili garlic sauce than it called for, because I’m pretty sensitive to spice, but I couldn’t think of what to replace it with. Next time I’ll probably leave it out altogether. The chickpeas didn’t turn out as crunchy as I wanted, but maybe I didn’t pat them down enough to get out the moisture. Overall a solid simple salad.

Proliant says

This looks so delicious and healthy! Great share. Thanks so much for the recipe.

Laurens Burger says

Absolutely amazing dinner! Has kept me so full and still have load left over! I used the peanut butter – call me Mr. Cheap – but it worked in absolute charm. I can’t wait till my little belly can take another load!

Migle says

I made this today and oh my GOD was it delicious! I could not stop eating it, it was just so crunchy and satisfying. The dressing is an absolute winner, too. I cannot wait to make this salad again!

The only modification that I made was to reduce the oil and maple syrup amounts to cut down on the calories, but it was still super creamy and all coated!

10/10, do recommend.

mrskia says

Just made this recipe for dinner last night!! It was absolutely delicious and it will be on rotation in my home. It was so amazing, I couldn’t wait to finish the leftovers this morning for breakfast. I have shared this recipe and your website with my sister. Can’t wait to try your other recipes. Thank you!!

Zhang Li says

This recipe seems great, but is it hard to specify the country or region? Asia is a HUGE continent with about half the world’s population, and food flavors differ so widely. In China (where I’m from and currently reside) we have different cuisines per province, so you can imagine generalizing something as Asian is pretty reductive. I think it would be less disrespectful if you were to treat Asian cuisine as a whole by its separate demographics, as many people say ‘Asian’ when they mean ‘East Asian,’ and people forget that India, Afghanistan, Pakistan, Indonesia, etc, are all part of Asia. Bottom line, labelling every dish with an Asian flavor as Asian without referring to its specific country/demographic is ignoring the differences that make Asia so wonderful, and I know this is different in America, but food is such a huge part of our culture, and this seems disrespectful. I know you’ll probably delete this comment, but please take this into consideration. I love your site and intend to keep using it, but I do not like the way that my country’s food is treated.

Desert Panz says

Simply put, delicious. Only modifications I made were adding orange, yellow and red short sweet peppers and multi-color organic kale.

Angel says

Can’t wait to try this out! Thank you sooo much for including weight measurements as well. It’s so much easier to get a good result that way instead of when a recipe just says “one bunch” or “one head of cabbage” since the sizes can vary.

JoAnne says

Oh, my Gosh!….this salad is my new addiction!

I can’t wait to try more recipes. I have made this salad and Spanish quinoa stuffed peppers….both solid winners.

Kayla says

I’ve made this salad 5 times now. My dad who hates kale was converted because of this salad. The chickpeas are a serious win. Thank you so much for this recipe!!!!

Jenni says

Delicious, my chili garlic paste isn’t hot at all so I added some sriracha. I used a bag of TJs organic baby kale, baby chard & baby spinach instead of straight kale. Easy and tasty. Will definitely make again.

Breanna Shirk says

Hi Dana! I’m trying a vegetarian version of the Whole 30 diet, which is slightly similar to an elimination diet. As I’m sure you know, it’s a bit tough as a lot of the Whole 30 recipes are meat-based, but I’ve found that a lot of your recipes can be easily adapted to work well for this. I LOVE anything spicy (which is why I’m such a fan of your recipes) but the preservatives in most chili garlic sauces won’t work. Do you have an easy at-home alternative?

Adrienne says

We love this recipe- it’s amazing! After making it, my b.f. and I are obsessed. Thank you again- not sure how I’ve been living without that chili garlic paste!!!

Kelli H says

Happy to hear you’re avoiding soy. The more I research it the more I want to stay away from it and it’s in everything! Very hard to avoid so I mainly try to limit my consumption as much as possible. I’m surprised you didn’t like coconut aminos; I literally can’t tell a difference when I’m cooking.

Must’ve been the brand I tried…

Missy says

Another hit! I made this last night and it was hard to save enough to bring to lunch for leftovers today, because we wanted to eat it all. Very tasty! Next time I might add some more vegetables (red peppers, broccoli, baby corn) to make it a heartier main dish, but this is perfect as is! As far as the comments above concerning the spice level – I think you just have to use your own judgment. My garlic chili sauce is crazy spicy, so I have to adjust according to my sauce and my preferences.

Prajna says

Hi,

The recipe looks great. I don’t eat garlic. Could you recommend any substitute for it in the recipe?

I usually use asafoetida as a spice instead of cooking onion and garlic.

Thanks for your help and great ideas!

Hi Prajna, I’m not familiar with asafoetida but if it has worked for you previously it’s worth a try! Otherwise, look for another chili paste that doesn’t have garlic in it! Let us know how it turns out!

Haley says

Really solid salad recipe. Such a great way to get rid of that small wedge of red cabbage that has been sitting in the fridge for weeks. I plan to make it again! The only adjustment I would make is reducing the amount of oil in the dressing (I used toasted sesame oil and it was a bit overpowering) or omit it completely. I think just a small amount (1 teaspoon) for flavoring would be sufficient since there is a lot of nut butter in the recipe. Water to thin. Oh it was also a bit spicy for me (I have moderate spice tolerance). I would reduce the amount of sriracha to about 3 tbsp (recipe is about 5, I used 4).

Stephanie says

This was way too spicy for me. I couldn’t eat it. But my husband liked it. He likes incredibly spicy foods and even he thought it was hot.

Hollie Levy says

This was amazing. I added quinoa and roasted cauliflower along with the chickpeas. My husband and I both loved it. A must make!

honey says

this looks DELICIOUS!!! Such a perfect summer salad. Thanks for a great new recipe.

dark valkyrie says

it looks fantastic!

Iris says

Thanks a lot for this chickpea idea! Never thought about roasting them before. They taste great this way.

Lavues says

Great recipe, plus it’s easy to make too!

Jessica says

I was so excited to read your new recipe as I am allergic to soy. I was so sad to learn that you used Miso as a main ingredient in making the roasted chickpeas. I hate to burst your bubble, but you didn’t avoid soy. Miso is fermented soybeans.

Chris says

Jessica, miso is not limited to soybeans. Try chickpea or azuki bean miso. My favorite brand is South River but I’m sure there are other companies that have soy-free offerings as well.

Under the notes:

*To keep this salad soy-free omit the chickpeas, or sub the miso for something like sea salt or extra chili garlic sauce.

Honey, What's Cooking says

This looks so delicious… bowls are so in right now… salad bowls or carb bowls. Very colorful!

The Wooden Spoon says

The miso roasted chickpeas sound amazing!

Linda says

Although some miso is made from soybeans, the soybeans are fermented. I’ve read that soybeans aren’t so great healthwise UNLESS they’re fermented (as in miso and tempe). (The soybeans in tofu are NOT fermented.)

Debs says

Love that you use a mandolin for those veg! I’d never be without mine. It’s the ultimate lazy girl kitchen accessory.

Cassie says

Asian flavors are the BOMB DOT COM. Definitely wanting to make this salad!

Susan says

This sounds really tasty. I’m always looking for kale salad recipes and the simple dressing without the extra soy is spot on. I like the added carrots, I think we’ve sort of gotten away from using the humble root.

Jen says

Soy-free, chickpea miso would be perfect in place of the soy based miso.

Dana says

This sounds wonderful! Do you have any suggestions for a cashew butter substitute since my children have severe peanut and tree nut allergies? Thank you!

Samantha says

Same in this house! We primarily use sunflower seed butter or soy nut butter, which would probably sub better for the cashew butter here.

Mary says

Miso is made from soy.

Under notes:

*To keep this salad soy-free omit the chickpeas, or sub the miso for something like sea salt or extra chili garlic sauce.

Nunzia says

It looks amazing and super easy to make. I can serve that with salmon. =)

Smart idea!

Irene says

You can also get miso made from chickpeas! Just in case you wanted some chickpeas on your chickpeas ;)

Ha! Great tip! I didn’t know that…

Erin says

Are you talking about the slaw at New Seasons? Man I love that grocery store. I’m really into the sweet potato noodles from their deli. Made with sweet potato starch, not actual spiralized sweet potatoes (though I think the dressing they use has a small amount of soy in it). Also, their cauliflower slaw!

And, echoing the sentiment above about coconut aminos. They’re a little more costly than soy sauce or tamari but they are a great substitute if you are avoiding soy products.

Love New Seasons! And yes, I love their kale, carrot, cabbage slaw! I’m hooked!

Meg says

Hello,

I think you can use miso made of rice or wheat koji instead of soybean, so many foods can still be soy-free, including these super crunchy chickpeas… What do you think?

Pat Hill says

I saw this while I was at work and picked up the needed ingredients on my way home! I am enjoying the spicy goodness right now!

Thank you!

Yay! Thanks for sharing, Pat!

Hannah says

Yum! If you continue to stick to a soy-free diet, have you tried coconut aminos? After I realized my body thinks that soy is the devil, I switched. Such a fantastic replacement for soy sauce!

I did! But unfortunately I didn’t like it : (

Shevon says

Thanks for a great new recipe.

Crystal Elston says

Looks Amazing!!! Need to stop at New Seasons on the way home anyway, so might as well pick up the ingredients. The sauce/dressing has me totally intrigued. AND I absolutely Must do those chickpeas. I already have miso ready and waiting. Thanks as always for great recipes.

Love New Seasons! Good luck, Crystal! xoxo

Carole says

Oh my – this looks DELICIOUS!!! Such a perfect summer salad, so fresh and flavor explosion!! Can’t wait to try this :)

Genevieve says

look so yummy!!!

Katrina says

I bet those chickpeas with that sauce are the best kind of flavour explosion!! So delicious!

hannah says

A kale salad was on my meal plan next week and I’ve been searching for a recipe. Very excited about this one!